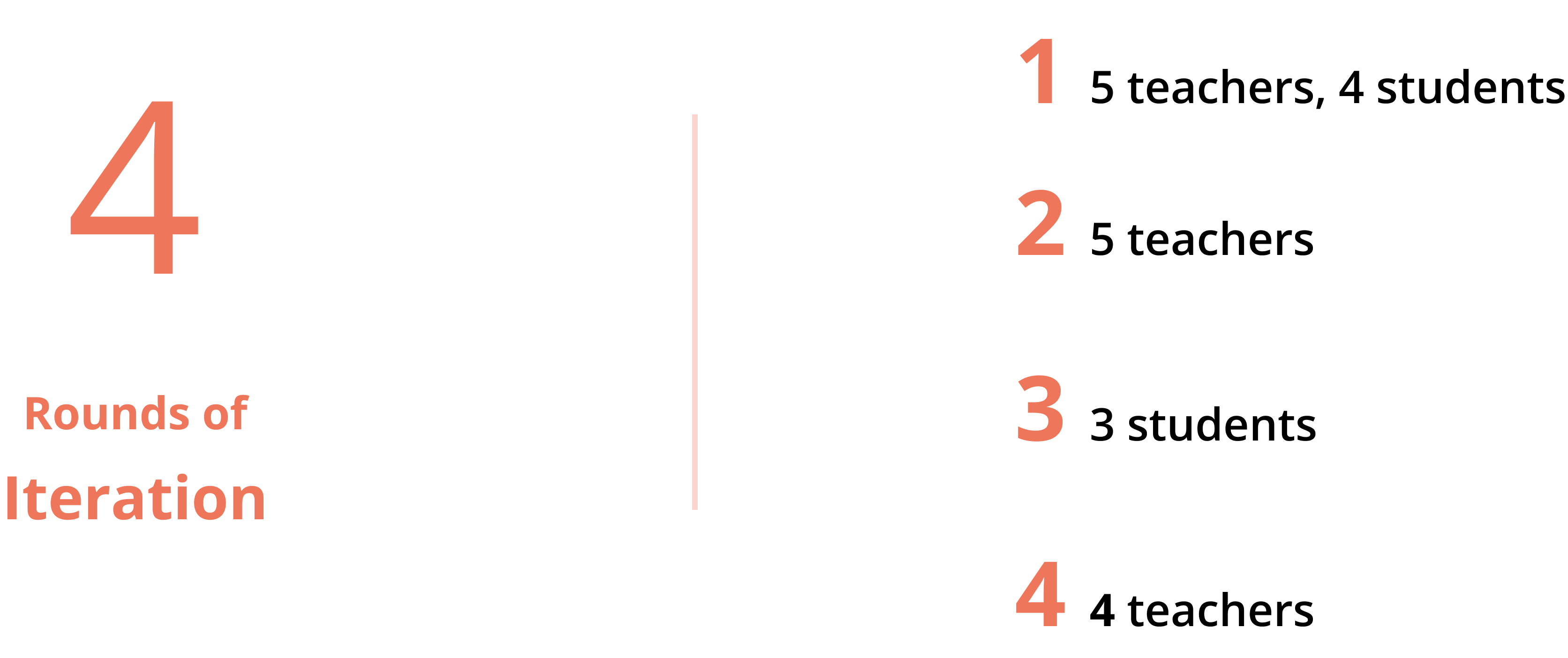

Overview

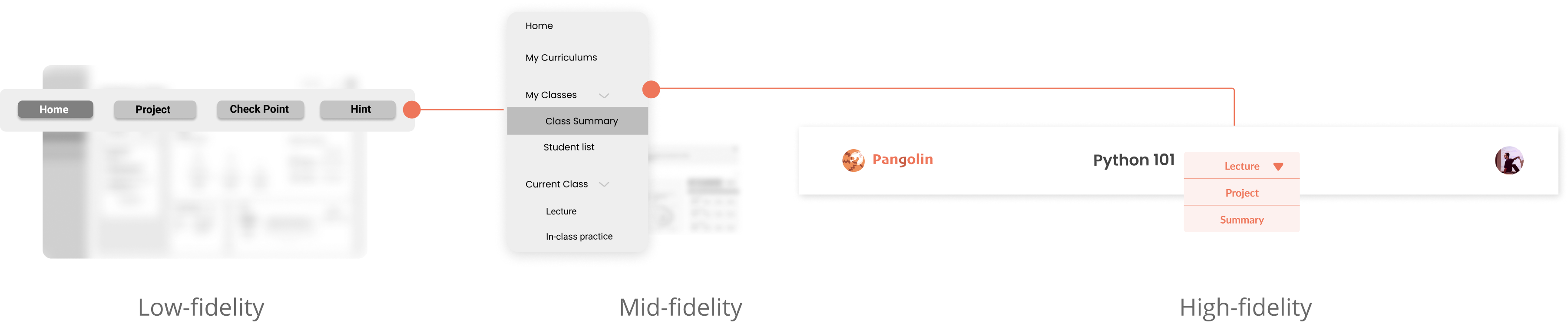

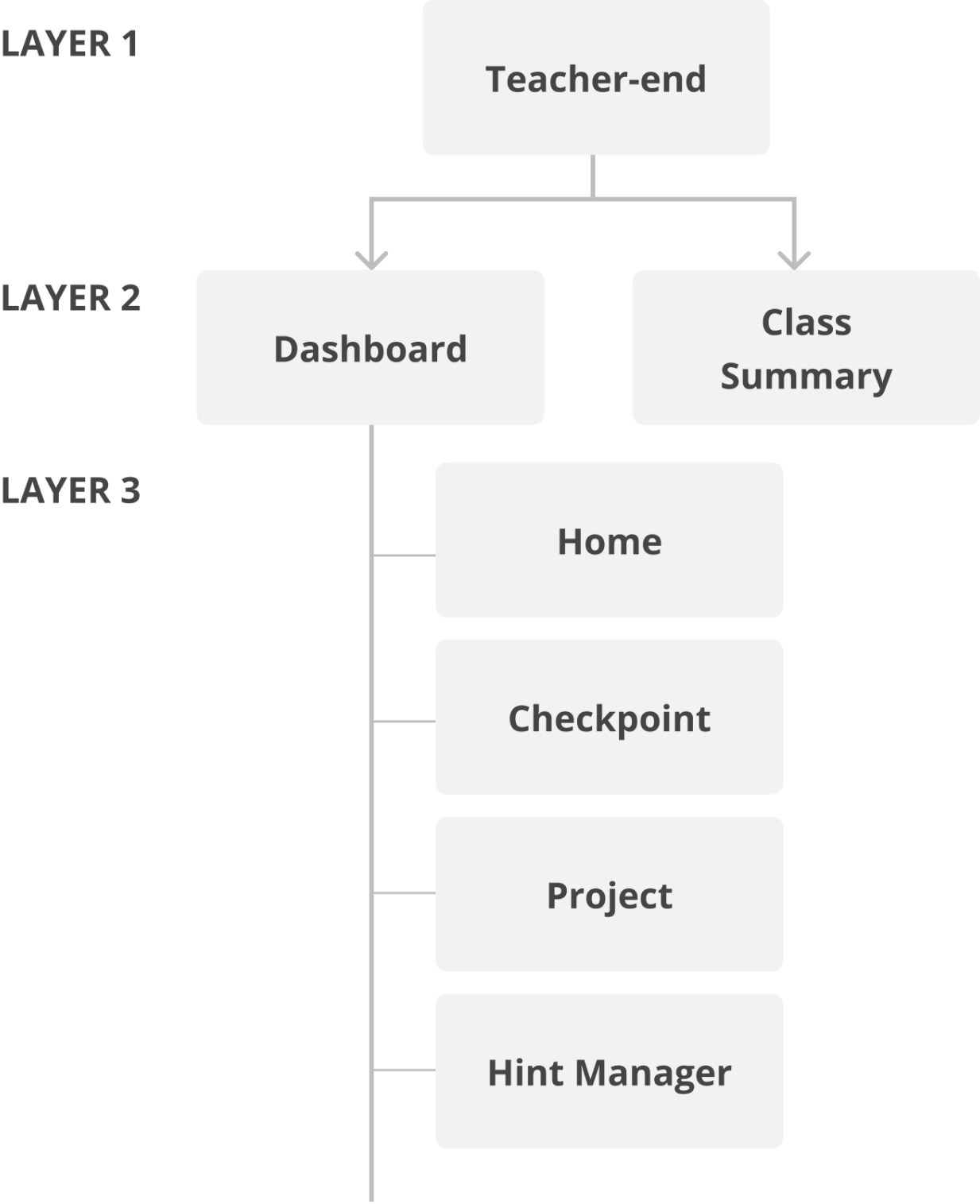

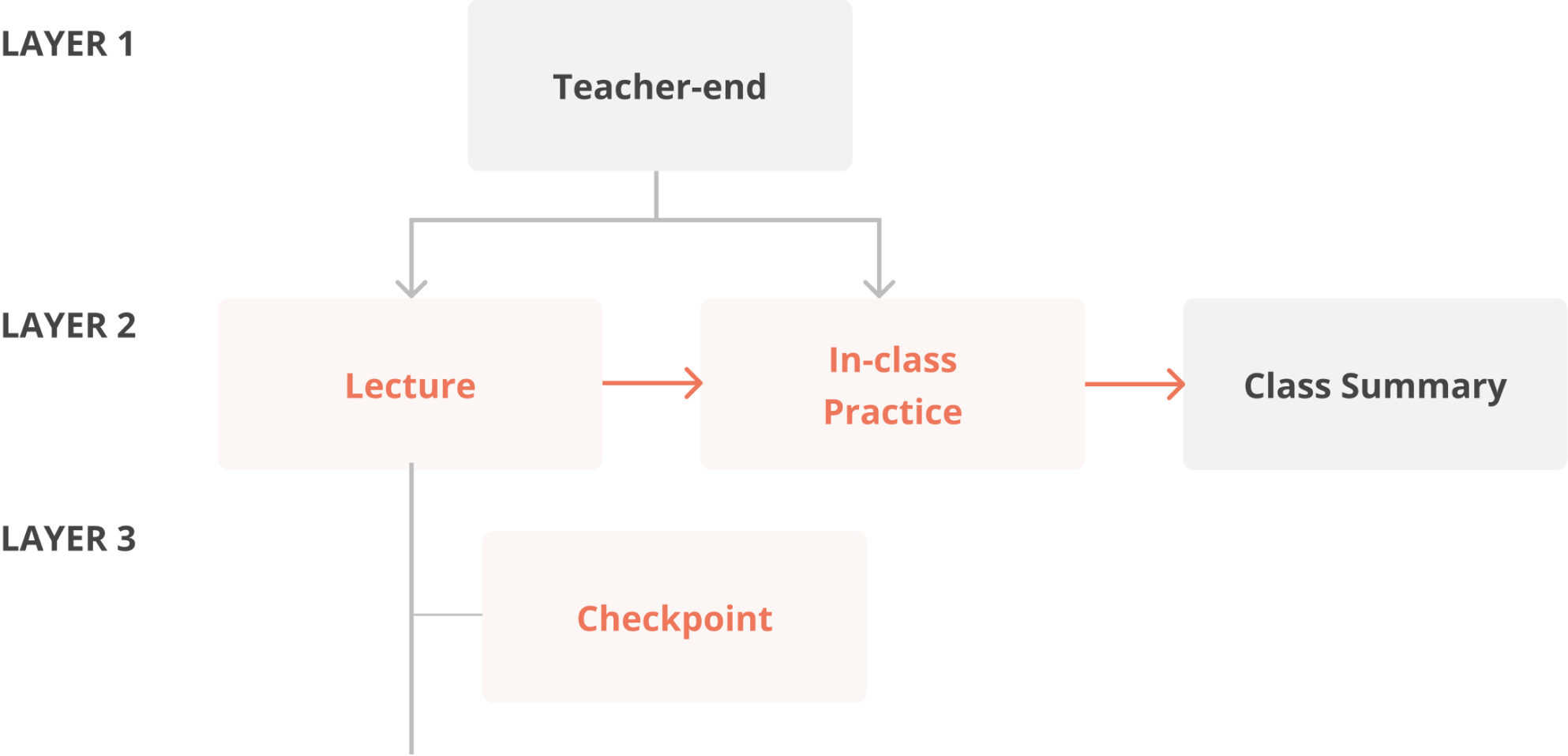

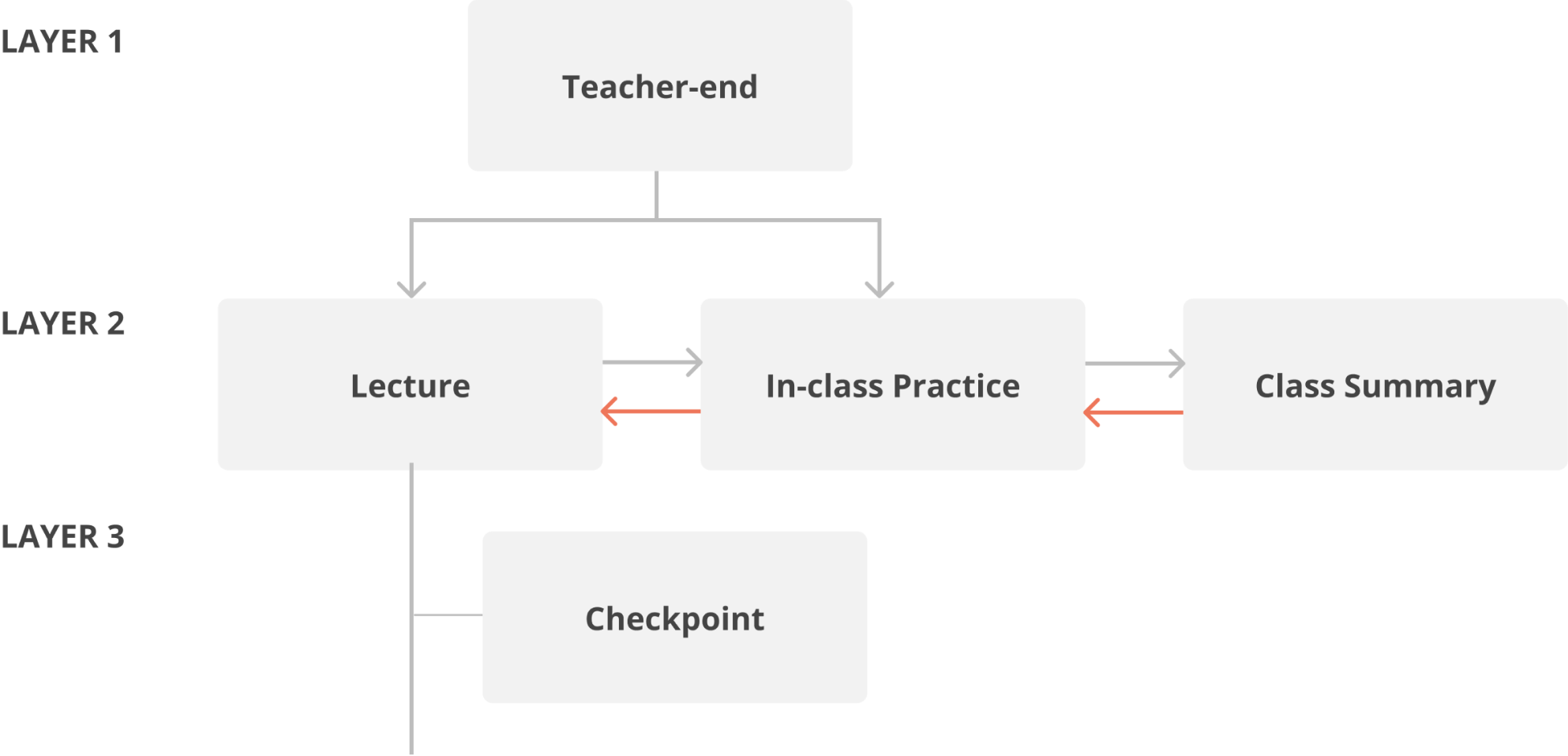

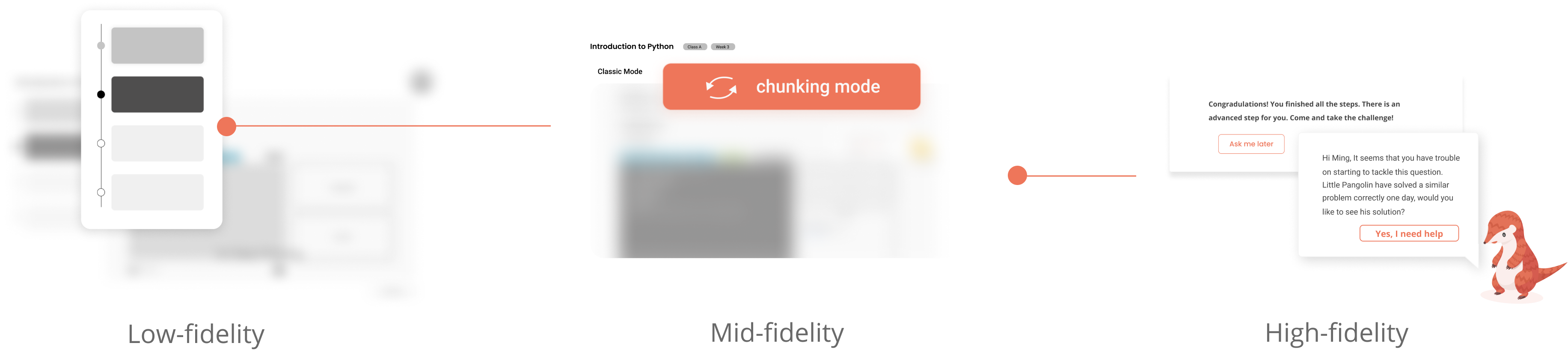

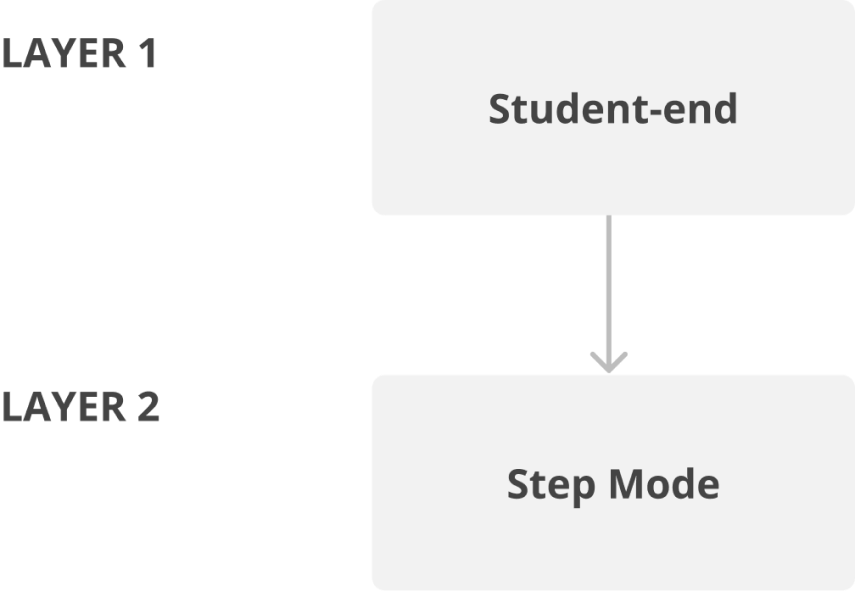

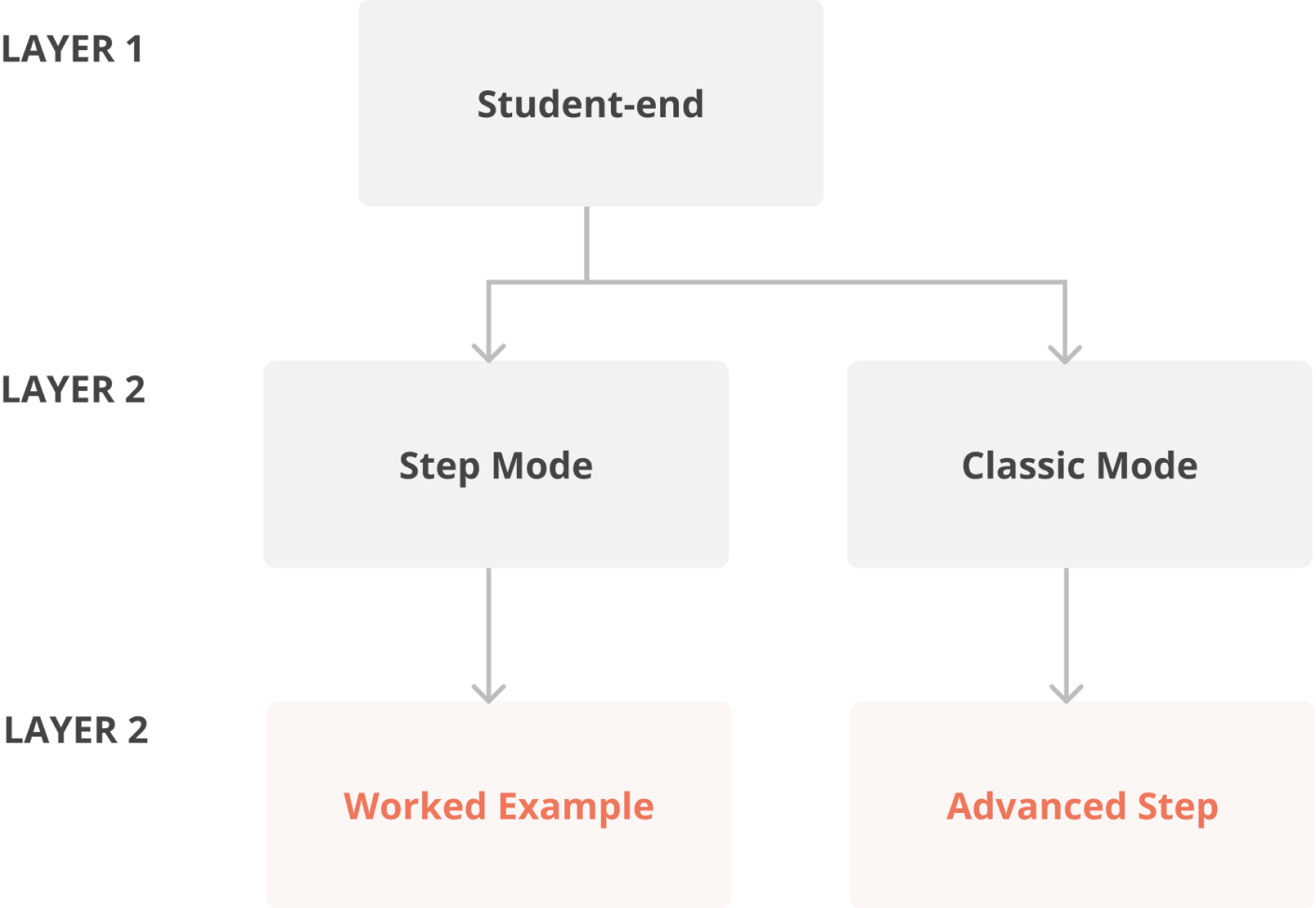

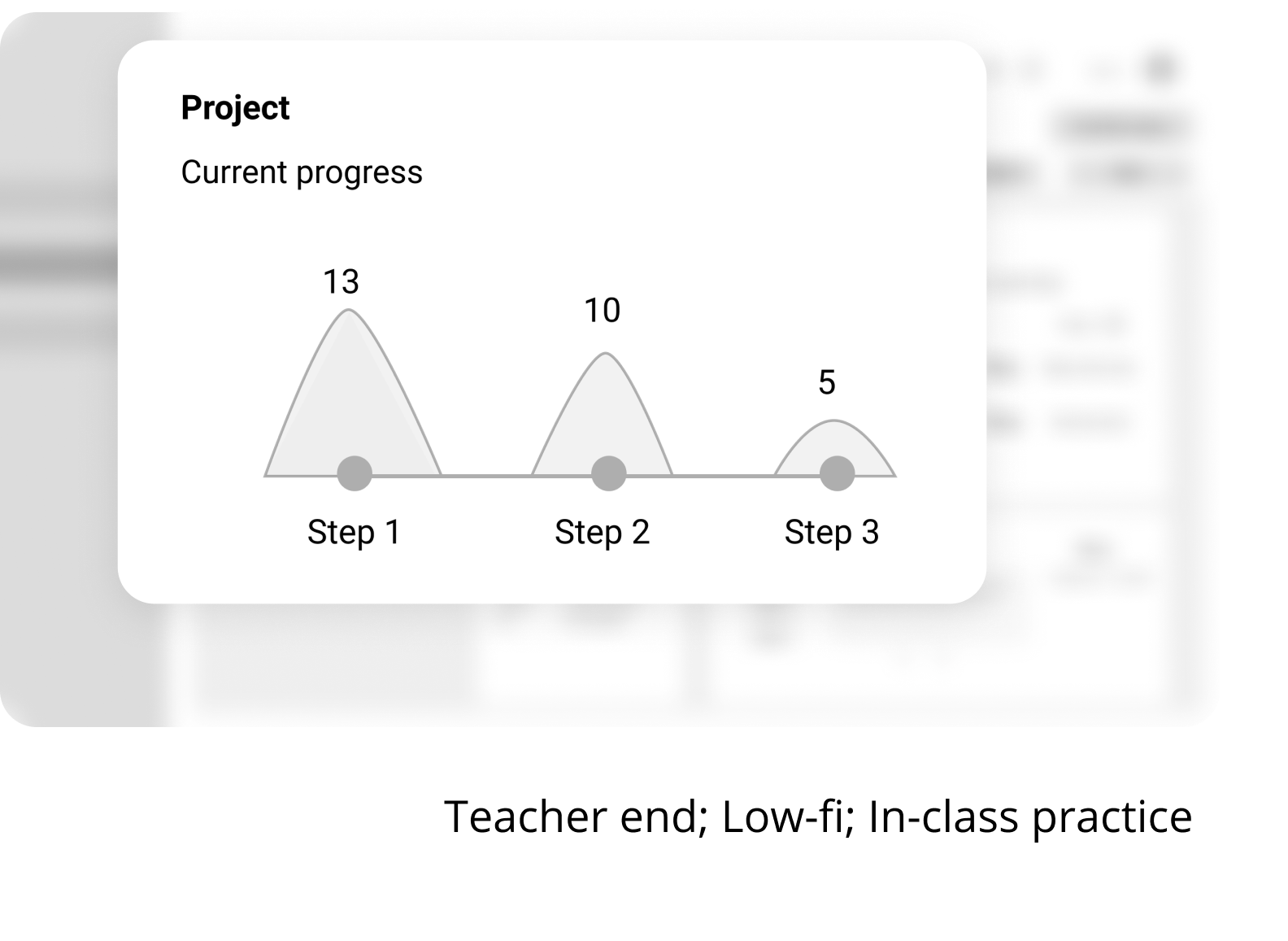

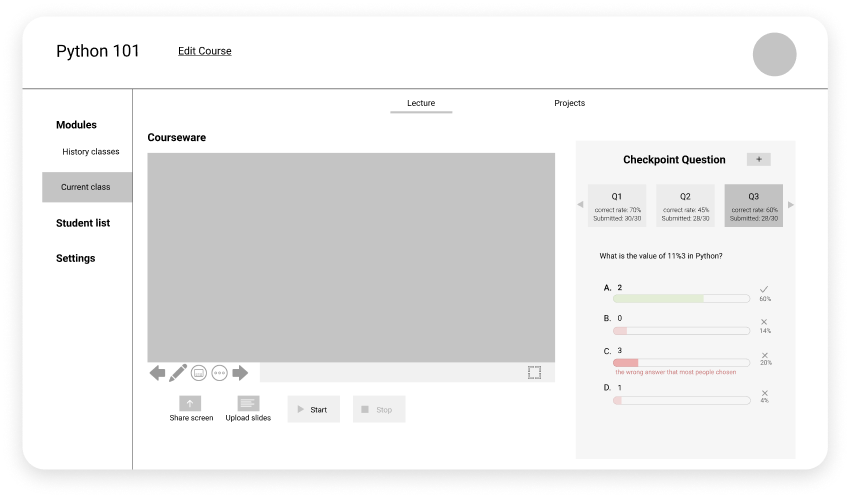

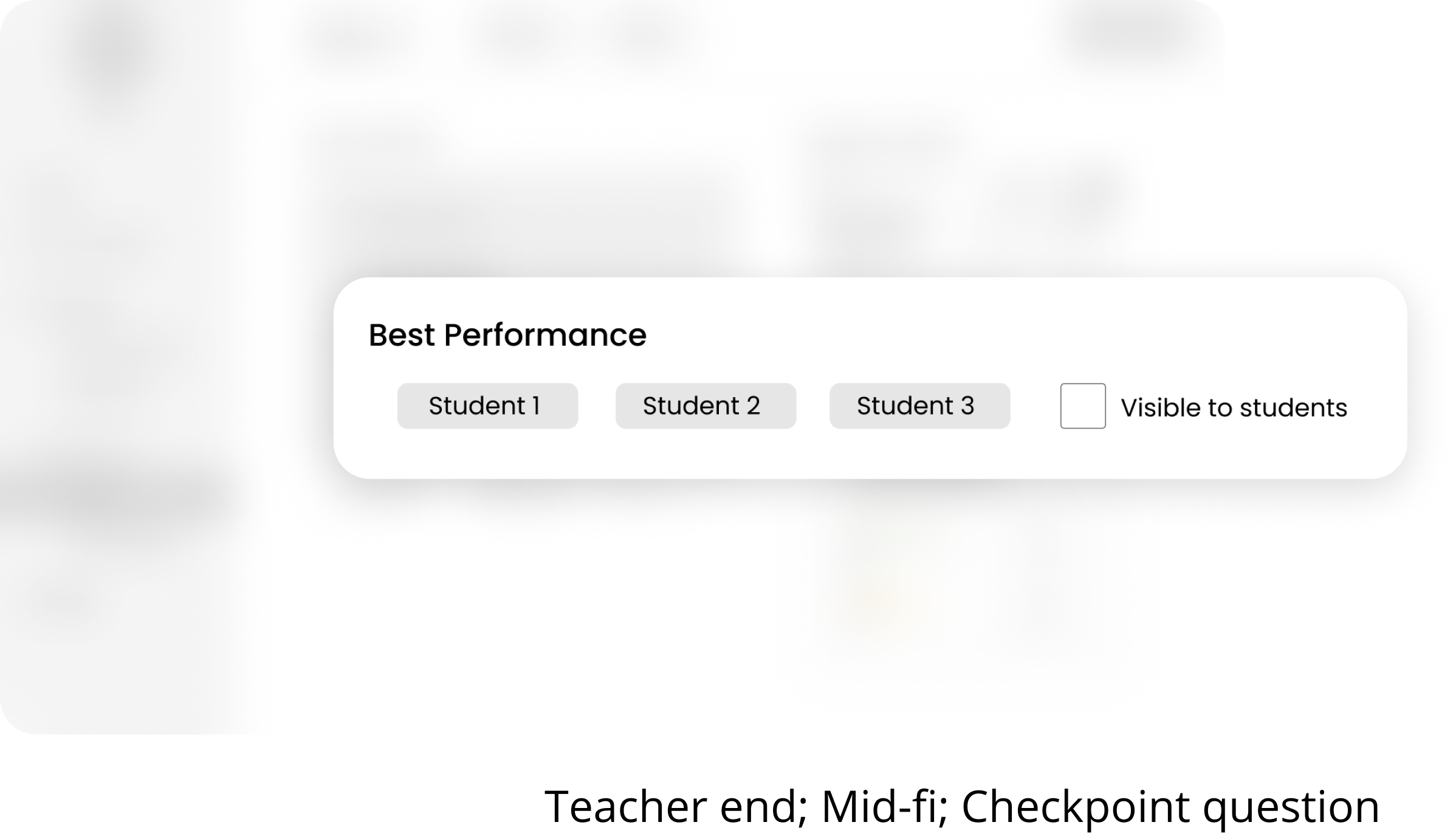

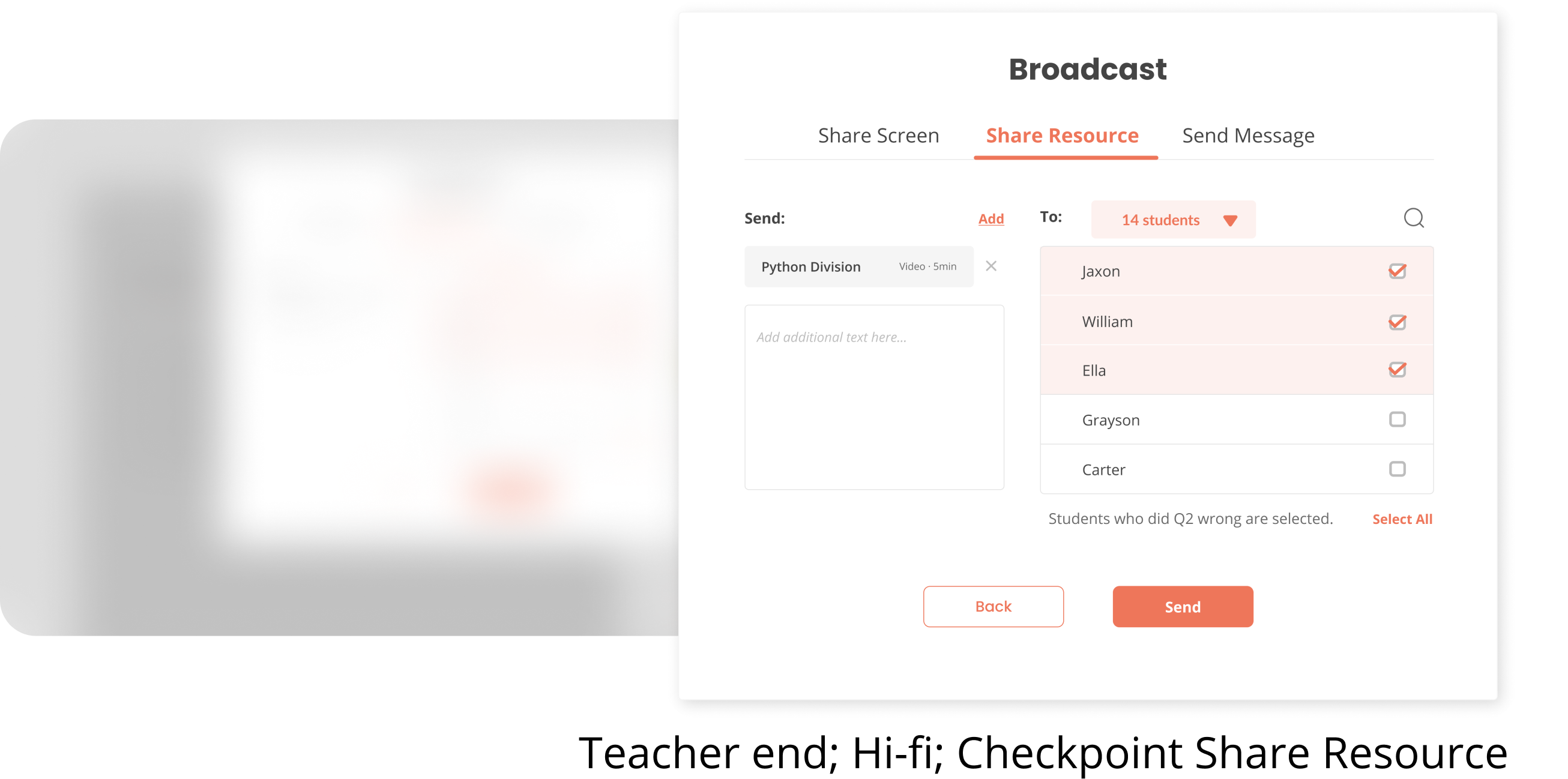

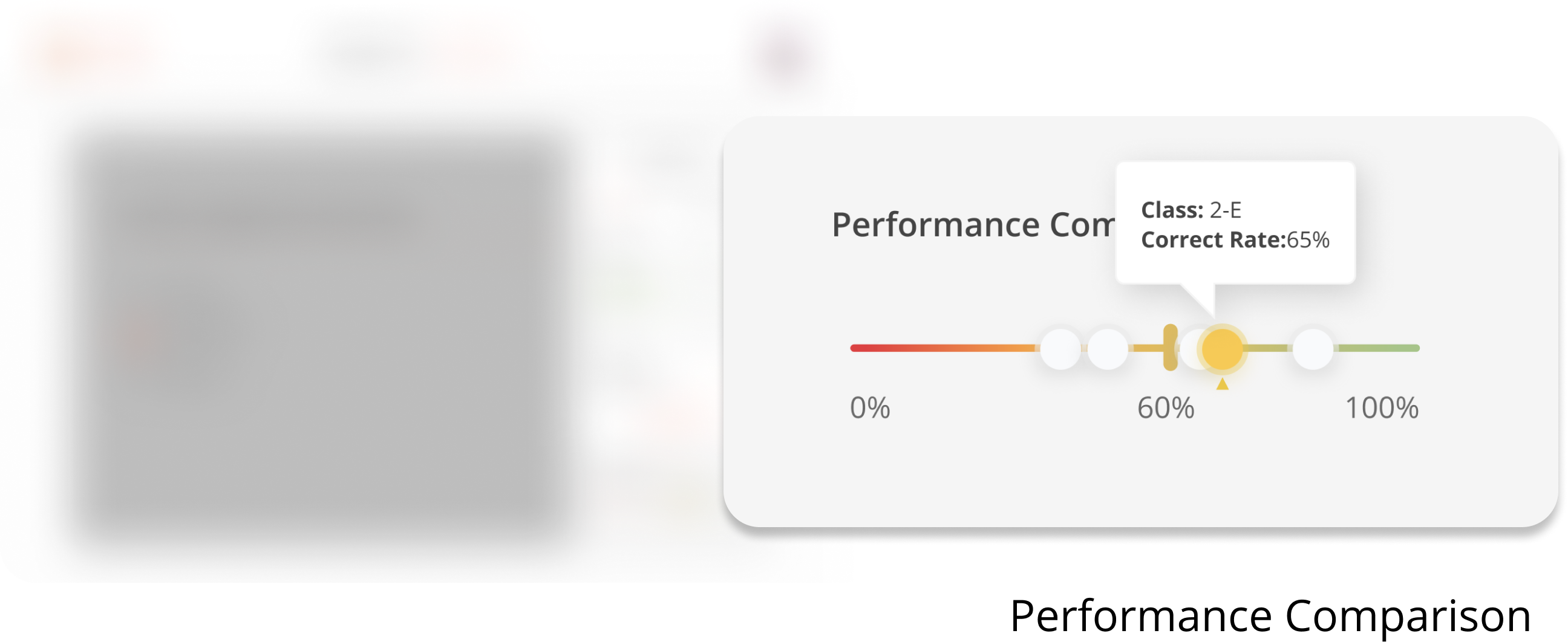

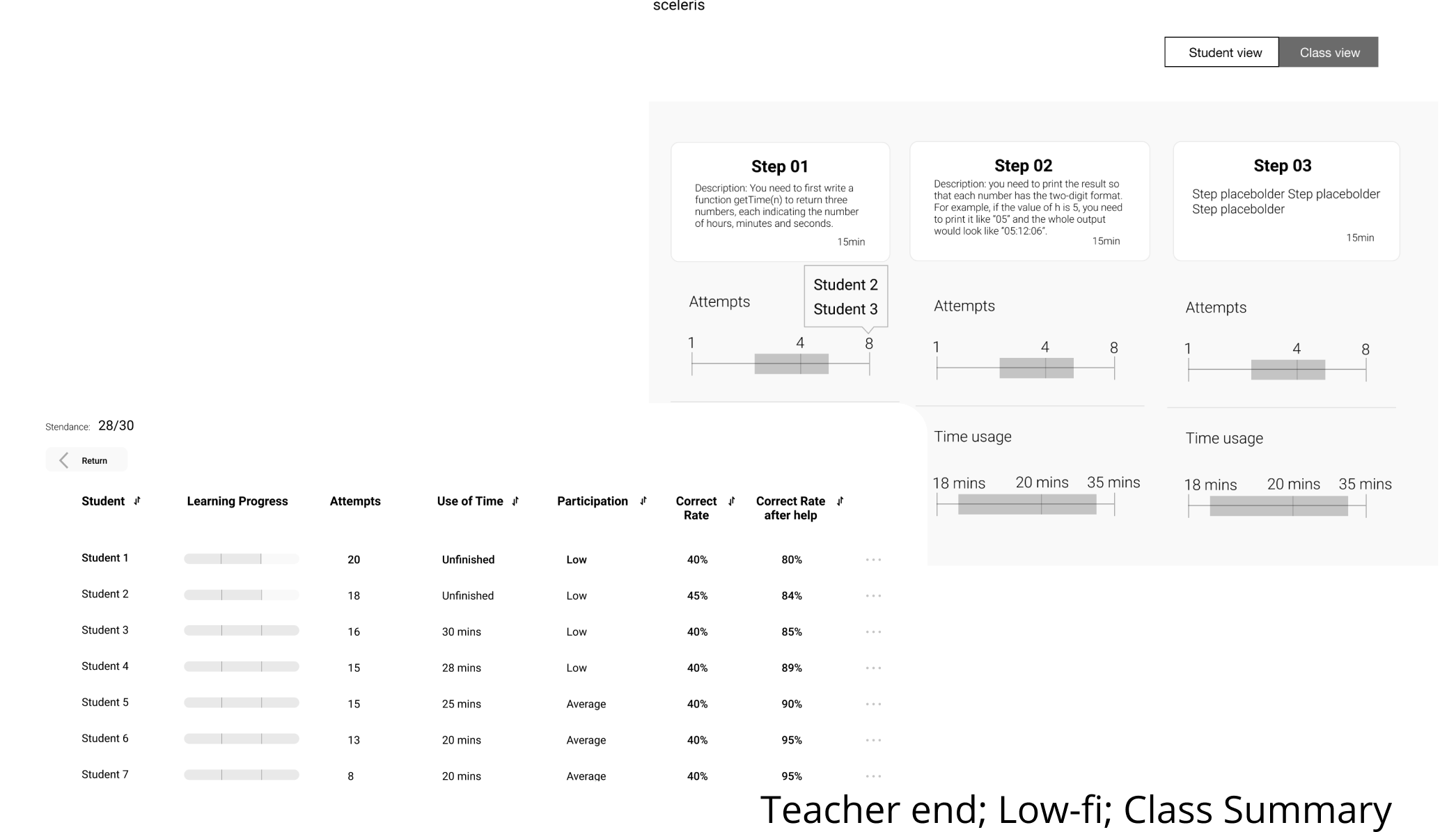

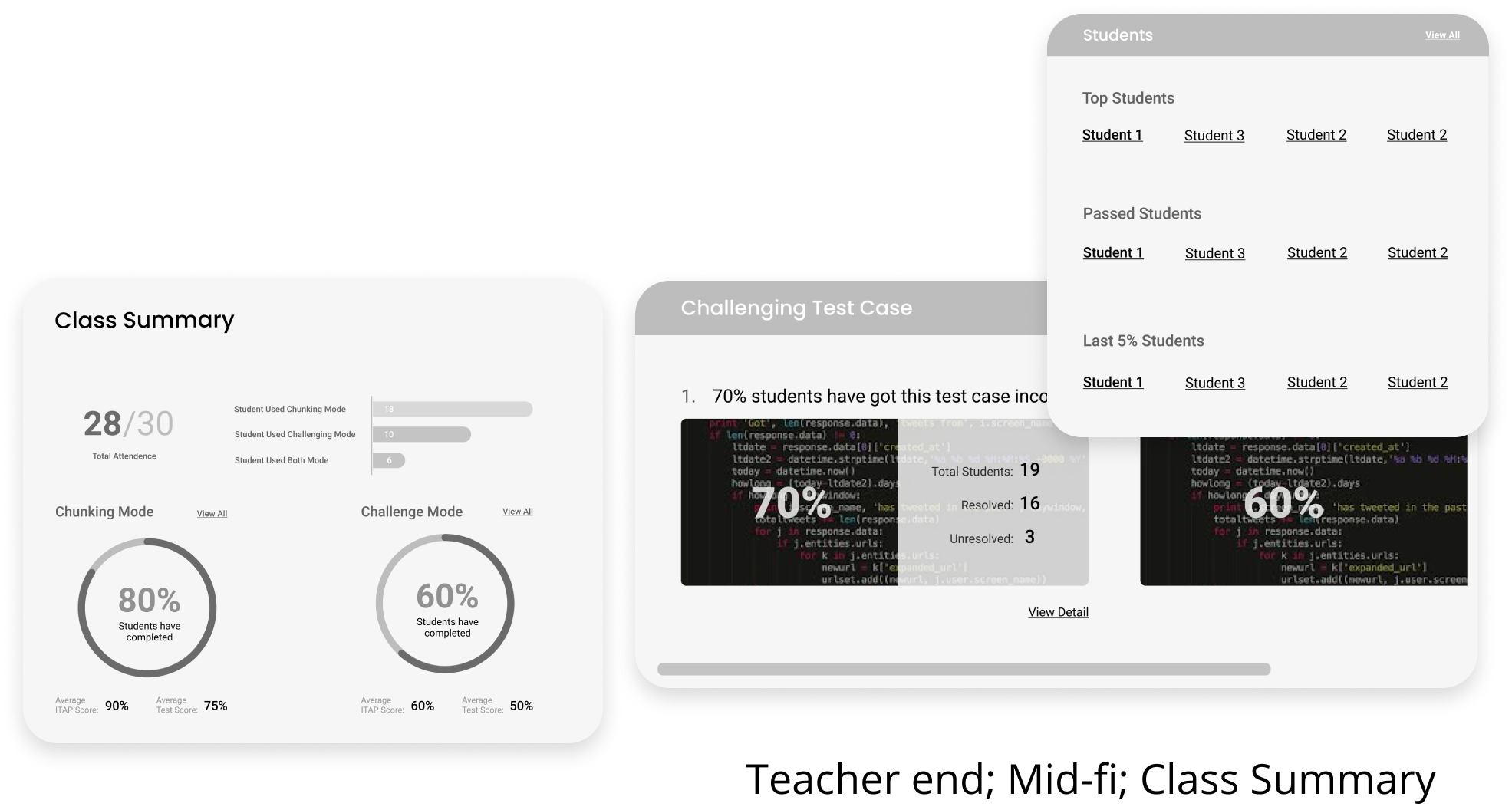

From May to August, we ran four rounds of iteration. In the first round, we interviewed both teachers and students, then tested and updated the information architecture of the whole product. From the second round on, we focused on three core features on the teacher side: Checkpoints, Project, and Class Summary. In the following sections, we walk through our iterations on the product structure, the features, and the metrics.